Foro Formación Hadoop

Formatos de ficheros/compresión HDFS

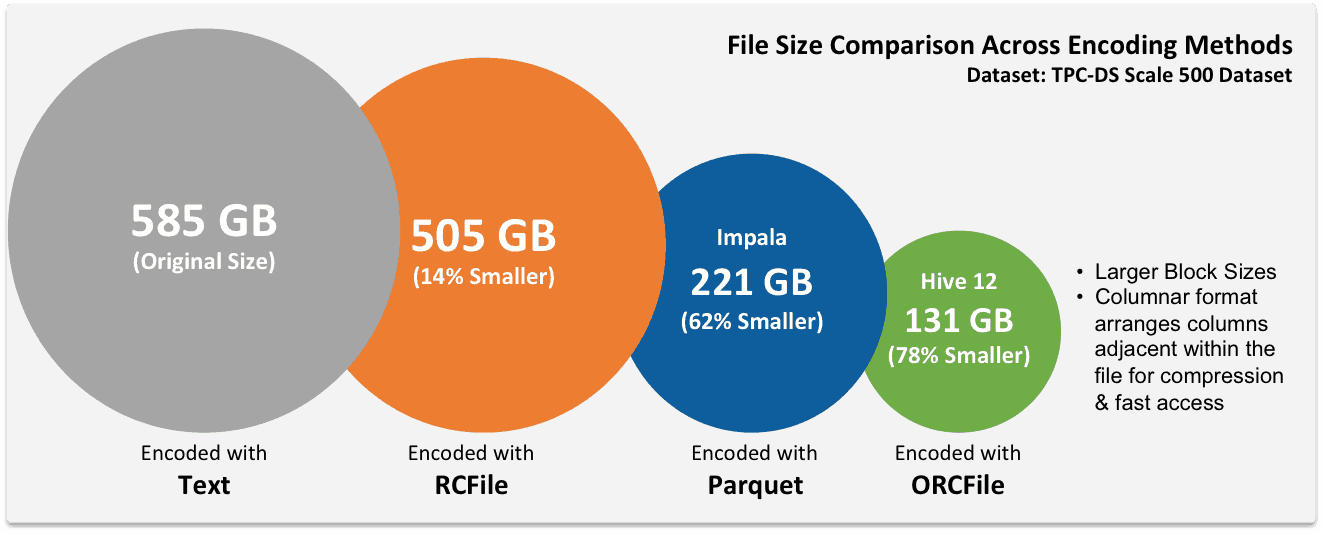

Comparación realizada por Hortonworks de los diferentes ficheros de Hadoop con su correspondiente compresión:

Fuente:http://hortonworks.com/blog/orcfile-in-hdp-2-better-compression-better-performance/

Benchmarking Apache Parquet

Narrow Results

The first test is the time it takes to create the narrow version of the Avro and Parquet file after it has been read into a DataFrame (three columns, 83.8 million rows). The Avro time was just 2 seconds faster on average, so the results were similar.

These charts show the execution time in seconds (lower numbers are better).

Test Case 1 – Creating the narrow dataset

The next test is a simple row count on the narrow data set (three columns, 83.8 million rows). The CSV count is shown just for comparison and to dissuade you from using uncompressed CSV in Hadoop. Avro and Parquet performed the same in this simple test.

Test Case 2 – Simple row count (narrow)

The GROUP BY query performs a more complex query on a subset of the columns. One of the columns in this dataset is a timestamp, and I wanted to sum the replacement_cost by day (not time). Since Avro does not support Date/Timestamp, I had to tweak the query to get the same results.

Parquet query:

|

1

2

3

4

|

val sums=sqlContext.sql("""select to_date(precise_ts) as day, sum(replacement_cost)

from narrow_parq

group by to_date(precise_ts)

""")

|

Avro query:

|

1

2

3

4

|

val a_sums=sqlContext.sql("""select to_date(from_unixtime(precise_ts/1000)) as day, sum(replacement_cost)

from narrow_avro

group by to_date(from_unixtime(precise_ts/1000))

""")

|

The results show Parquet query is 2.6 times faster than Avro.

Test Case 3 – GROUP BY query (narrow)

Next, I called a .map() on our DataFrame to simulate processing the entire dataset. The work performed in the map simply counts the number of columns present in each row and the query finds the distinct number of columns.

|

1

2

3

4

|

def numCols(x:Row):Int={

x.length

}

val numColumns=narrow_parq.rdd.map(numCols).distinct.collect

|

This is not a query that would be run in any real environment, but it forces processing all of the data. Again, Parquet is almost 2x faster than Avro.

Test Case 4 – Processing all narrow data

Test Case 4 – Processing all narrow data

The last comparison is the amount of disk space used. This chart shows the file size in bytes (lower numbers are better). The job was configured so Avro would utilize Snappy compression codec and the default Parquet settings were used.

Parquet was able to generate a smaller dataset than Avro by 25%.

Test Case 5 – Disk space analysis (narrow)

Wide Results

I performed similar queries against a much larger “wide” dataset. As a reminder, this dataset has 103 columns with 694 million rows and is 194GB in size.

The first test is the time to save the wide dataset in each format. Parquet was faster than Avro in each trial.

Test Case 1 – Creating the wide dataset

The row-count results on this dataset show Parquet clearly breaking away from Avro, with Parquet returning the results in under 3 seconds.

Test Case 2 – Simple row count (wide)

The more complicated GROUP BY query on this dataset shows Parquet as the clear leader.

Test Case 3 – GROUP BY query (wide)

The map() against the entire dataset again shows Parquet as the clear leader.

Test Case 4 – Processing all wide data

The final test, disk space results, are quite impressive for both formats: With Parquet, the 194GB CSV file was compressed to 4.7GB; and with Avro, to 16.9GB. That reflects an amazing 97.56% compression ratio for Parquet and an equally impressive 91.24% compression ratio for Avro.

Test Case 5 – Disk space analysis (wide)

El artículo completo en: http://blog.cloudera.com/blog/2016/04/benchmarking-apache-parquet-the-allstate-experience/

Redes sociales